“Once upon a time . . .” Setting the context and storyline is important, but promoters of human and institutional capacity development (HICD) need a strong punch line. Indicators are required: output, outcome, impact and process. Participants in the “HICD Storytelling Workshop – from design to evaluation and back again” spent an afternoon identifying solutions for HICD challenges and developing indicators to tell the story of their successes in overcoming those challenges.

For the most part, the HICD challenges revolve around a lack of practical skills. These challenges involve students who graduate without the skills to get a job or do the work for which they were hired, faculty members who lack the pedagogical capability to transfer those skills, and the organizational, institutional or systemic infrastructure that inhibits sustained improvements in agricultural education and training (AET). Addressing these challenges initially captivated workshop participants, but working in small groups, they were soon immersed in the intricacies of storytelling.

Participants were organized in small groups and assigned the task of coming up with solutions to these HICD problems. Some groups co-created iterative, adaptive management projects for collaborative group learning; others moved straight away to implementing technical solutions for improving curriculum and pedagogy. While the challenges and solutions could be individual, organizational, institutional or systemic, it was important to specify not only who benefited, but also who needed to be informed about accomplishments.

Storytelling began by identifying output (what was done) and outcome (what short-term change occurred) indicators. These indicators necessarily involved quantitative measures so that experiences could be compared. Comparisons are critical for measuring (1) progress over time, (2) differences between target groups (gender, ethnicity, etc.), and (3) alternative ways of achieving similar goals. Quantitative measures also facilitated aggregation across multiple projects for donor reporting. However, not all indicators need to be standardized for donor aggregation; customized indicators adapted to local conditions can also be valuable for local learning.

As the afternoon progressed, impact (sustained long-term change) and process (program implementation) indicators were developed and polished. However, discussions within and between groups began to reveal difficulties in telling their stories. Participants found both contexts and solutions to be complex and not amenable to simplification into quantitative measures. The most precisely measured indicators often failed to communicate the valuable lessons learned in a meaningful way. Dynamic AET learning processes were rendered lifeless. Qualitative indicators were necessary. Participants further recognized that the consequences of a project are often difficult to predict. Initially defined indicators may entirely miss unexpected outcomes and impacts, both positive and negative.

Communicating how an intervention improved quality, and how that improvement was achieved, involves tailoring the message and responding to the concerns of the target audience. The lessons learned about how change occurs are very important to local stakeholders. Attention to local priorities in quality assurance (QA) was as critical as informing donors about the extent of progress. Characterizing local processes serves two purposes: providing locally meaningful feedback, and distinguishing between what is replicable and what is unique to the situation.

Communicating what a project accomplished goes beyond what can be predicted and pre-packaged for donor consumption. Indeed, the value and sustainability of achievements can be effectively demonstrated through indicators of local confidence and acceptance. Flexibility is required to account for the unexpected. Often, the indicator is not so much the magnitude of change as its direction. Workshop participants concluded that, on their own, indicators don’t really tell the story. Narratives, whether highlighting the qualities of a “sleeping beauty” or recounting one of Aesop’s lessons, are needed to supplement static indicators.

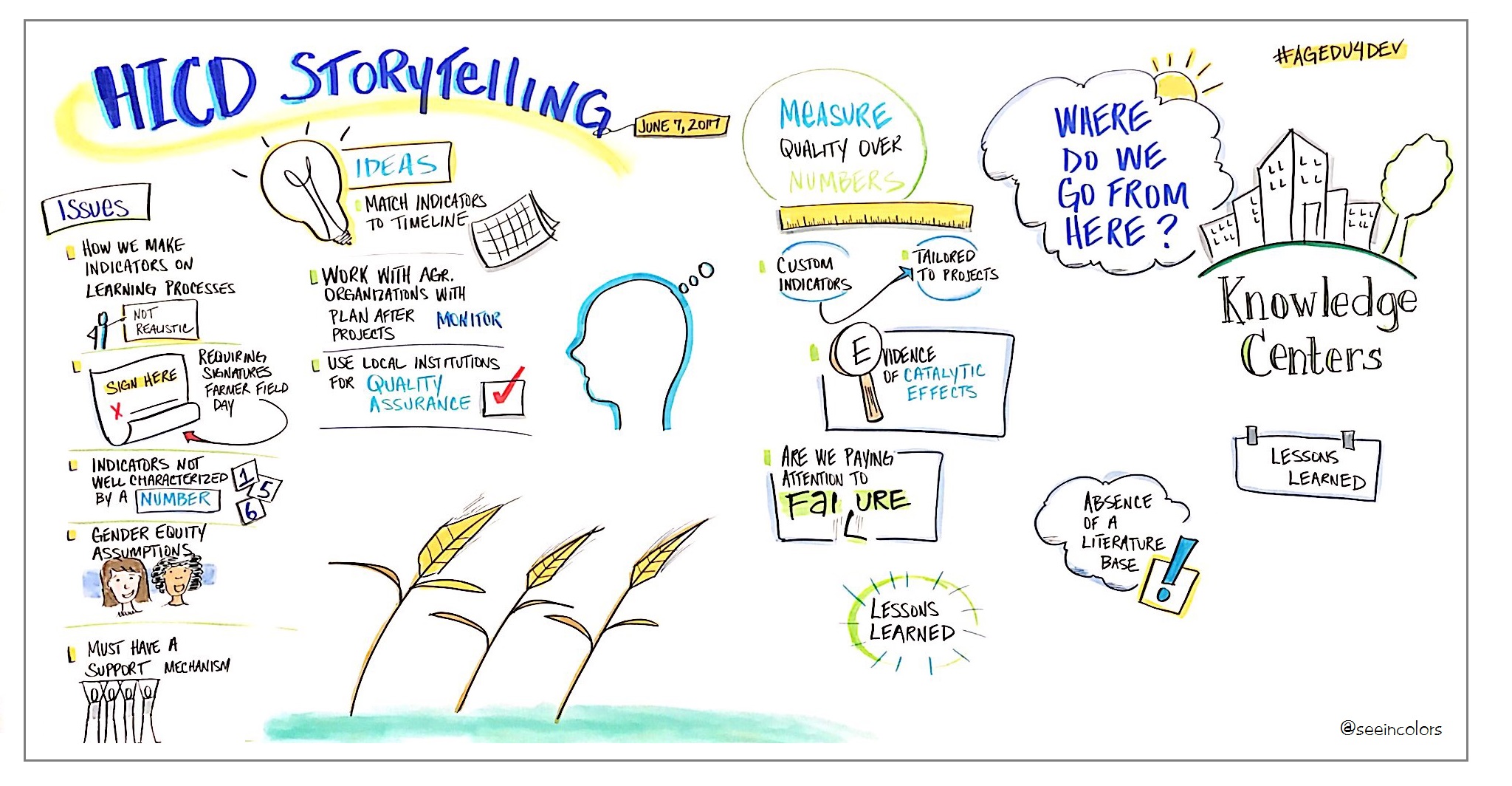

A visual summary made by Lisa Nelson from See in Colors during the Pre-Symposium Workshop.

Keith M. Moore is retired but continues to contribute to the USAID-funded Innovation for Agricultural Training and Education (InnovATE) project led by Virginia Tech. He has held many positions at Virginia Tech. Most recently he was director for performance assessment for the Office of Research, Education and Development and the director of the InnovATE project.